These days, DDoS seems to be moving more and more toward what is known as “application level DDoS”. While network level DDoS is made of nonsense packets (such as spoofed ICMP packets or a flood of TCP SYN packets) that are not intended to complete any meaningful transaction, application level DDoS is made of real requests for the service, and that’s exactly what makes it so problematic.

Overcoming a network level attack is typically about having enough CPU and bandwidth; the content of the attack itself is meaningless. However, when the attack is made up of seemingly innocent requests, defense is not trivial, and hackers are well aware of this. It takes just a few thousand requests per second to kill most of today’s application stacks; at an order of magnitude more, even the web servers themselves buckle. In fact, just two or three sources can kill a mammoth Linux server: no matter how impressive the server spec is, if the request is served by LAMP, the load on the database and the scripting language is enough to eventually take the server down (around 5-10K rps at most). The client is simple: it opens a connection, sends a request to a single URL, and repeats this ad infinitum.

In this type of attack, the attacker needs to open real TCP connections, so the sources can actually be blocked effectively (as opposed to spoofed ICMP packets, whose true origin you cannot really know).

The most common advice sysadmins are given about preventing DDoS revolves around blocking the offending IPs. This starts out simple, but when it gets to 6000 concurrent IPs, manual configuration is simply not enough, and tools such as mod_evasive, which are supposed to automate these actions, are insufficient.

There are a few problems here: One is that even if everything does work, it takes time to map the whole botnet, and until then, the service is probably unavailable. Moreover, we’ve seen botnets that rate-limit the traffic coming out of a single IP address, as well as use HTTP/1.1 to reuse connections, and that makes this technique even less effective.
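To make the limitation concrete, here is a minimal sketch of the kind of per-IP rate check that automated blocking tools rely on. The class name, function name, window size, and threshold are all assumptions chosen for illustration, not taken from any particular tool. It flags a noisy source quickly, but a bot that paces itself at about one request per second stays under the limit.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Tracks request timestamps per source IP over a fixed time window."""

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._hits = defaultdict(deque)  # ip -> timestamps still inside the window

    def record(self, ip: str, now: float) -> bool:
        """Record one request; return True if the IP is now over the limit."""
        hits = self._hits[ip]
        hits.append(now)
        # Drop timestamps that have fallen out of the window.
        while hits and hits[0] <= now - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


def find_offenders(events, window_seconds=10.0, max_requests=20):
    """Return the IPs that exceeded the limit in a stream of (ip, timestamp) events."""
    counter = SlidingWindowCounter(window_seconds, max_requests)
    offenders = set()
    for ip, timestamp in events:
        if counter.record(ip, timestamp):
            offenders.add(ip)
    return offenders


# Illustrative traffic (documentation IP ranges, hypothetical values):
# a noisy bot sending 50 requests in one second, and a slow bot sending
# one request per second for five minutes.
noisy = [("203.0.113.5", i * 0.02) for i in range(50)]
slow = [("198.51.100.7", float(i)) for i in range(300)]
offenders = find_offenders(noisy + slow)
```

With these assumed thresholds only the noisy source ends up in `offenders`; lowering `max_requests` far enough to catch the slow bot would also start catching ordinary visitors.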

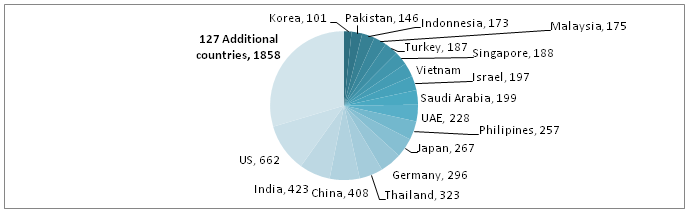

Some people go even further and suggest that you block a whole geo-region (such as APAC) using IP ranges. From our experience, if you are willing to accept some collateral damage, white-listing can be an effective method. The figure shows an example of positively identified agents in a medium attack (6000 concurrent bots); if we were to white-list the United States, we’d reduce the traffic by 90%. Of course, white-listing requires a very granular analysis of IP addresses.
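As a rough illustration of range-based white-listing, the sketch below checks a client address against a list of allowed CIDR blocks using Python's standard `ipaddress` module. The ranges are reserved documentation networks standing in for a real per-region list, and `is_allowed` is a hypothetical helper name; a real deployment would load ranges from a geo-IP data source.

```python
import ipaddress

# Hypothetical allow-list. These are reserved documentation ranges,
# used here only as placeholders for real per-region IP ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/25"),
]


def is_allowed(client_ip: str, networks=ALLOWED_NETWORKS) -> bool:
    """Return True if client_ip falls inside any allow-listed network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Unparseable addresses are treated as not allowed.
        return False
    return any(address in network for network in networks)
```

The collateral damage mentioned above shows up here directly: any legitimate visitor whose address is outside the listed ranges is rejected along with the bots.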

Another common approach is to identify common patterns in the traffic, and block, based on that. For example: a particular URL, a particular User-Agent, etc.
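A pattern filter of this kind can be sketched in a few lines. The `Request` type, the rule list, and the example patterns (`/search.php`, `ExampleBot`) are all hypothetical and exist only to show the shape of the check.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Minimal stand-in for the request attributes a filter might inspect."""
    path: str
    user_agent: str


# Hypothetical rules: (attribute name, compiled pattern).
BLOCK_RULES = [
    ("path", re.compile(r"^/search\.php$")),
    ("user_agent", re.compile(r"ExampleBot/\d+")),
]


def matches_block_rule(request: Request, rules=BLOCK_RULES) -> bool:
    """Return True if any rule's pattern matches the named request attribute."""
    return any(
        pattern.search(getattr(request, field)) is not None
        for field, pattern in rules
    )
```

Rules like these only hold while the attacker keeps the URL and User-Agent fixed.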

While all of these are sometimes effective, the problem is that as botnets evolve (rapidly), they invest more and more in obscuring their behavior, by breaking patterns.

Here are two examples of recent botnets that we dealt with:

| | **Botnet A** | **Botnet B** |
| --- | --- | --- |
| **No. of concurrent sources** | 2,000-3,000 | 7,000-8,000 |
| **Bot’s request pattern** | 500 requests, and then the bot goes silent (it takes about 2 minutes per rotation) | 200-300 requests per cycle, at a slow pace (around 3-4 minutes per cycle), with a one-second wait time between requests |
| **Connection maintenance technique** | The bots reuse the same connection using HTTP/1.1 (so every bot opens only 1-2 connections) | The bots attempt to make the requests as memory-intensive as possible for the web server, using empty, incomplete POST requests and HTTP/1.1 keep-alive |
| **HTTP usage** | The bots maintain user sessions and accept server cookies | The bots use a dictionary of hundreds of existing User-Agents, with target URLs changing over time |

So what is the answer to the huge, attribute-mutating, rate-limiting, session-keeping bunch of bots?

Here are some of our conclusions:

- Even though all attackers gravitate towards application level attacks, botnets are still capable of all the traditional network attacks. So it is essential to be completely scalable and agile. This means that the network should be able to handle many gigabits of load and distribute it effectively.

- Once an IP has been identified as part of the botnet, blocking it, either directly or at the upstream provider, is crucial.

- The only way to stop an application layer attack is at the application layer. You have to distinguish between humans and bots (think CAPTCHA). Putting every website visitor in a bad mood makes the DDoS at least partially successful, but there are many less intrusive tests (most bots don’t support cookies, let alone JavaScript, the DOM, and many other browser behaviors), and you don’t have to subject everyone to them, just the usual suspects.
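One of the less intrusive tests mentioned in the last point, checking whether a client supports cookies, could look roughly like the sketch below. It is an illustration under stated assumptions, not a description of any product: the secret key, function names, and the choice to bind the token to the client IP are all hypothetical. The server sets the token as a cookie on a suspect's first request and only serves the real page once the client sends it back.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret; in practice this would be random and kept private.
SECRET_KEY = b"replace-with-a-random-secret"


def issue_token(client_ip: str, secret: bytes = SECRET_KEY) -> str:
    """Create the cookie value handed to a suspect client on its first request."""
    return hmac.new(secret, client_ip.encode("utf-8"), hashlib.sha256).hexdigest()


def passes_cookie_check(
    client_ip: str, cookie_value: Optional[str], secret: bytes = SECRET_KEY
) -> bool:
    """Return True only if the client sent back the token issued for its IP."""
    if not cookie_value:
        # No cookie at all: the client ignored the Set-Cookie header.
        return False
    expected = issue_token(client_ip, secret)
    # Constant-time comparison on bytes avoids leaking how much of the token matched.
    return hmac.compare_digest(expected.encode("utf-8"), cookie_value.encode("utf-8"))
```

A bot that keeps sessions and accepts cookies, like Botnet A above, would pass this particular check, which is why it is only one of several tests and is best applied to suspect traffic rather than to everyone.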

For those who attempt to brave the attacks themselves: to some degree, all of these methods mentioned above can be implemented on a DIY basis. You can also read a great write-up about guerilla methods for defeating network attacks here.
