The annual Imperva Incapsula Bot Traffic Report, now in its fifth year, is an ongoing statistical study of the bot traffic landscape. For our latest report we examined 16.7+ billion visits to 100,000 randomly-selected domains on the Incapsula network to tackle the following questions:

- How much website traffic is generated by bots?

- How are bad bots used in cyberattacks?

- What drives good bot visits to various websites and services?

- Which are the most active bad and good bots?

The answers to these questions are found in the infographic below. In the accompanying commentary we take a closer look at some of the macro trends, as reflected in the activity of the most active bot archetypes.

Most Website Visitors are Bots

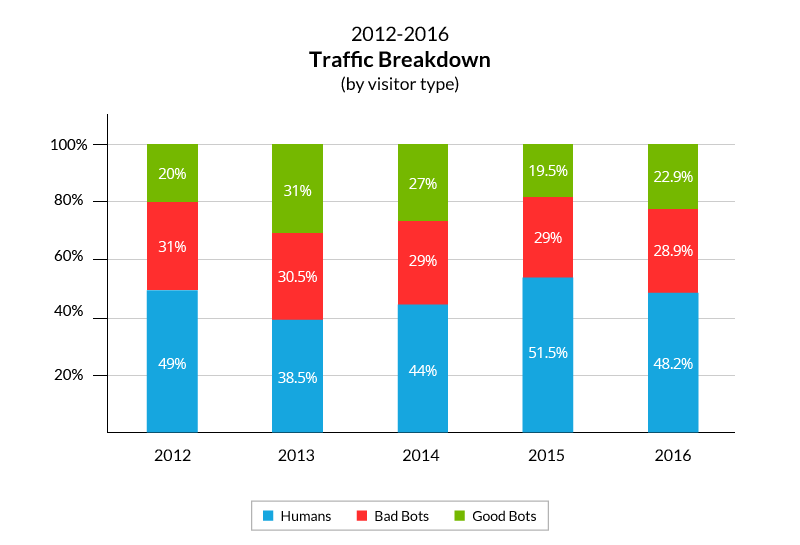

In 2015 we documented a downward shift in bot activity on our network, resulting in a drop below the 50 percent line for the first time in years. In 2016 we witnessed a correction of that trend, with bot traffic scaling back to 51.8 percent—only slightly higher than what it was in 2012.

As evidenced by the graph above, this change is attributed to an increase in good bot activity. In 2016 we tracked 504 unique good bots—278 of which were active enough to generate at least 1,000 daily visits to our network. Of these, 57.2 percent displayed an increase in activity, while only 29.4 percent saw their activity decrease year-over-year.

This would have resulted in a bigger uptick, but for the fact that most (66.7 percent) of the changes were in the -0.01 percent to 0.01 percent range. The latter showcases the predictability of bot traffic, especially given the different nature of the compared samples.

The bad bot numbers echoed this consistency: their activity continued to fluctuate around the 30 percent mark, just as it has for the past five years.

Note that the relative share of bad bot visits (and of bot visits in general) is higher on less trafficked websites. For instance, on the least trafficked domains, those frequented by ten human visitors a day or fewer, bad bots accounted for 47.7 percent of visits while total bot traffic amounted to 93.3 percent.

These disproportionately high numbers are the result of the indiscriminate activity by good and bad bots described in our previous reports, which will be further addressed in the final section of this post. Simply put, good bots will crawl your website and bad bots will try to hack it regardless of how popular it is with the human folk. They will even keep visiting a domain in the absence of all human traffic.

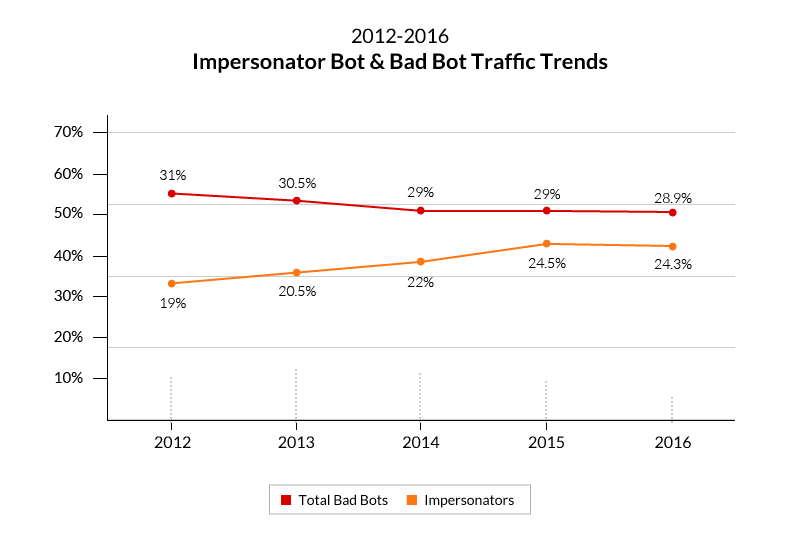

Impersonator: The Textbook Bad Bot

In the bad bot category—for the fifth year in a row—impersonator bots continue to be the most active offenders. In 2016 they were responsible for 24.3 percent of all traffic on our network and 84 percent of all bad bot attacks against Incapsula-protected domains.
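As a rough cross-check, the two figures above can be combined to estimate the overall bad bot share. The sketch below assumes that the 84 percent figure applies to bad bot visits in the same way it applies to bad bot attacks, which the report does not state explicitly; the variable and function names are illustrative only.

```python
# Figures quoted in this post (percent of all traffic unless noted).
IMPERSONATOR_SHARE_OF_ALL_TRAFFIC = 24.3
IMPERSONATOR_SHARE_OF_BAD_BOTS = 84.0  # assumption: attacks ~ visits
TOTAL_BOT_SHARE = 51.8


def implied_bad_bot_share(impersonator_share: float, share_of_bad: float) -> float:
    """Bad bot share of all traffic implied by the two impersonator figures."""
    return impersonator_share / (share_of_bad / 100.0)


bad_bots = implied_bad_bot_share(
    IMPERSONATOR_SHARE_OF_ALL_TRAFFIC, IMPERSONATOR_SHARE_OF_BAD_BOTS
)
good_bots = TOTAL_BOT_SHARE - bad_bots
humans = 100.0 - TOTAL_BOT_SHARE

print(f"bad bots:  {bad_bots:.1f}%")
print(f"good bots: {good_bots:.1f}%")
print(f"humans:    {humans:.1f}%")
```

Under that assumption the implied bad bot share comes to roughly 28.9 percent, in line with the "around 30 percent" mark noted earlier.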

This consistently dominant position is the result of the following two factors:

1. Impersonation is easy and worthwhile

Impersonators are attack bots masking themselves as legitimate visitors so as to circumvent security solutions. Clearly such bypass capabilities complement all malicious activities and that makes impersonators the ‘weapon of choice’ for the majority of automated attacks. More so, because a rudimentary level of obfuscation is relatively easy to achieve.

Thus, the more primitive impersonators are simply bots that hide behind a fake ‘user-agent’, an HTTP/S header that declares the visitor’s identity to the application. By modifying the content of that header, these attackers present themselves as either good bots or humans, hoping that this will be enough to gain access.

More advanced offenders take things a step further by thoroughly modifying their HTTP/S signatures to mimic browser-like behavior—including the ability to capture cookies and parse JavaScript. In many cases they’re doing both at the same time.
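To see why a fake user-agent is so cheap, consider a deliberately naive filter that trusts whatever the header claims. This is a minimal sketch for illustration, not how Incapsula classifies traffic; the token list and the function names are hypothetical.

```python
from typing import Mapping

# Hypothetical allowlist of good bot tokens (illustrative, not a real rule set).
GOOD_BOT_TOKENS = ("googlebot", "baiduspider", "bingbot")


def claimed_identity(user_agent: str) -> str:
    """Classify a visitor purely by what its User-Agent header claims."""
    lowered = user_agent.lower()
    if any(token in lowered for token in GOOD_BOT_TOKENS):
        return "good bot"
    if user_agent.startswith("Mozilla/"):
        return "browser"
    return "unknown"


def naive_filter_allows(headers: Mapping[str, str]) -> bool:
    """Allow anything that claims to be a browser or a known good bot."""
    return claimed_identity(headers.get("User-Agent", "")) != "unknown"


# A script that honestly identifies itself is blocked...
honest_script = {"User-Agent": "python-requests/2.31.0"}
# ...while the same script with a copied header is waved through.
impersonator = {
    "User-Agent": "Mozilla/5.0 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
}

print(naive_filter_allows(honest_script))
print(naive_filter_allows(impersonator))
```

Since the header is entirely under the client's control, a check like this only stops bots that do not bother to lie, which is why additional signals are needed.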

Case in Point: The Ticketing Bots

Last December a nationwide law by the name of “Better Online Ticket Sales (BOTS) Act of 2016” made it illegal for anyone to use bots to purchase popular event tickets in a way that circumvents fair purchase rules.

Ticketing bots addressed by the legislation are a classic example of impersonator bots: non-humans programmed to mimic legitimate visitors while automating human tasks. Like the DDoS bots mentioned below, their actions are only made malicious by the scope in which they are used.

2. Impersonators are perfect for DDoS attacks

Impersonator obfuscation techniques are a perfect fit for what DDoS offenders are trying to achieve: overwhelming a server with a high number of seemingly legitimate requests, for example by asking it to load the home page 50,000 times a second.

Here lies the other reason for the massive amount of impersonators’ activities. Where a single bot visit (session) would suffice for another type of attack to succeed, a DDoS perpetrator needs to send out many thousands of bots to take down a target.

The most active bad bots are all impersonators used for DDoS attacks. One of these is the Nitol malware, the single most common bad bot, responsible for 0.12 percent of all website traffic. In 2016 the majority of Nitol assaults were launched by impersonator bots posing as older versions of Internet Explorer.

Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; SV1)

Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0; .NET CLR 1.1.4322; .NET4.0C; .NET4.0E; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)

Cyclone, the second most common bad bot, was often used to mimic search engine crawlers—predominantly good Google and Baidu bots.

Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)
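A claimed crawler identity can be cross-checked against its IP address. Google documents a reverse DNS lookup followed by a forward lookup as a way to verify Googlebot; the sketch below follows that general idea using Python's standard `socket` module. The function names, the injectable resolver parameters, and the stub data are assumptions made for illustration, and the forward lookup shown handles IPv4 only.

```python
import socket
from typing import Callable, List

# Hostname suffixes accepted for Google crawlers in this sketch.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> str:
    """PTR lookup: IP address -> hostname."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> List[str]:
    """A lookup: hostname -> list of IPv4 addresses."""
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], List[str]] = forward_lookup,
) -> bool:
    """True only if the PTR record is a Google host that resolves back to ip."""
    try:
        hostname = reverse(ip).rstrip(".").lower()
    except OSError:
        return False  # no PTR record, or lookup failed
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        return ip in forward(hostname)
    except OSError:
        return False


# Stub DNS data so the example runs without network access (hypothetical).
FAKE_PTR = {
    "66.249.66.1": "crawl-66-249-66-1.googlebot.com",
    "203.0.113.9": "host.example.net",
    "198.51.100.7": "fake.googlebot.com",  # attacker-controlled PTR record
}
FAKE_A = {
    "crawl-66-249-66-1.googlebot.com": ["66.249.66.1"],
    "fake.googlebot.com": ["66.249.66.1"],  # does not point back to attacker
}

for address in FAKE_PTR:
    verified = is_verified_googlebot(
        address, reverse=FAKE_PTR.__getitem__, forward=FAKE_A.__getitem__
    )
    print(address, verified)
```

The forward lookup is what defeats a spoofed PTR record: an attacker can name their own IP anything, but cannot make Google's hostname resolve back to it.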

Another example is the Mirai malware, which was recently used to launch one of the most devastating DDoS attacks to date. When used in HTTP flood assaults, Mirai too employs impersonation tactics, such as masquerading as Chrome or Safari browsers.

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/601.7.7 (KHTML, like Gecko) Version/9.1.2 Safari/601.7.7

It should be mentioned that the identities used by impersonator bots are highly fluid. Case in point, when collecting data for this report we recorded Nitol impersonators using over 14,000 different user-agent variants and 17 different identities.
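The distinction between user-agent variants and identities can be sketched by grouping the sample strings quoted in this post by the client each one claims to be. The regular expressions and names below are simplifying assumptions for illustration; they are not the classification rules used for the report.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Set

# Ordered: the first matching pattern wins (Chrome strings also say "Safari").
IDENTITY_PATTERNS = [
    ("Googlebot", re.compile(r"Googlebot/")),
    ("Baiduspider", re.compile(r"Baiduspider/")),
    ("Internet Explorer", re.compile(r"MSIE \d+")),
    ("Chrome", re.compile(r"Chrome/")),
    ("Safari", re.compile(r"Version/[\d.]+ Safari/")),
]


def identity_of(user_agent: str) -> str:
    """Map a user-agent string to the identity it claims."""
    for name, pattern in IDENTITY_PATTERNS:
        if pattern.search(user_agent):
            return name
    return "other"


def group_variants(user_agents: Iterable[str]) -> Dict[str, Set[str]]:
    """Group distinct user-agent variants under their claimed identity."""
    groups: Dict[str, Set[str]] = defaultdict(set)
    for ua in user_agents:
        groups[identity_of(ua)].add(ua)
    return dict(groups)


SAMPLES = [
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; SV1)",
    "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0; "
    ".NET CLR 1.1.4322; .NET4.0C; .NET4.0E; .NET CLR 2.0.50727; "
    ".NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; Baiduspider/2.0; "
    "+http://www.baidu.com/search/spider.html)",
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/601.7.7 "
    "(KHTML, like Gecko) Version/9.1.2 Safari/601.7.7",
]

groups = group_variants(SAMPLES)
for identity, variants in sorted(groups.items()):
    print(f"{identity}: {len(variants)} variant(s)")
```

Here six variants collapse into five identities; the same kind of grouping, at far larger scale, is what separates thousands of variants from a handful of identities.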

Feed Fetchers: Good Bots Going Mobile

There are numerous ways in which good bots support the various business and operational goals of their owners—from personal users to large multinationals.

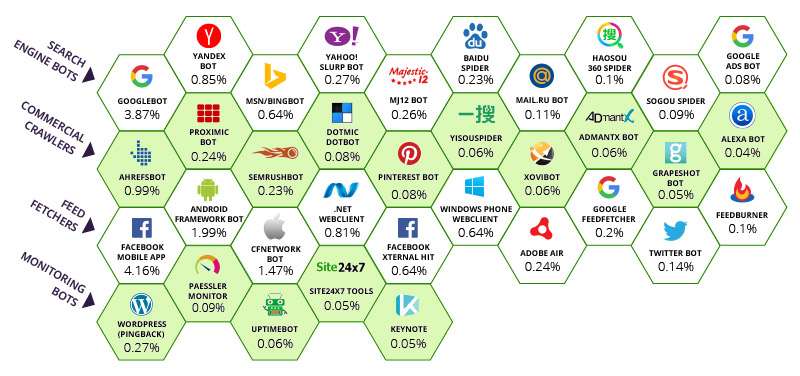

On a broader level, however, these can be categorized by the following four groups:

- Feed fetchers – Bots that ferry website content to mobile and web applications, which then display it to users.

- Search engine bots – Bots that collect information for search engine algorithms, which is then used to make ranking decisions.

- Commercial crawlers – Spiders used for authorized data extractions, usually on behalf of digital marketing tools.

- Monitoring bots – Bots that monitor website availability and the proper functioning of various online features.

Of the four groups, search engine bots are probably the best known to the average internet user. However, they are not nearly as active as feed fetchers, which accounted for 12.2 percent of all 2016 traffic.

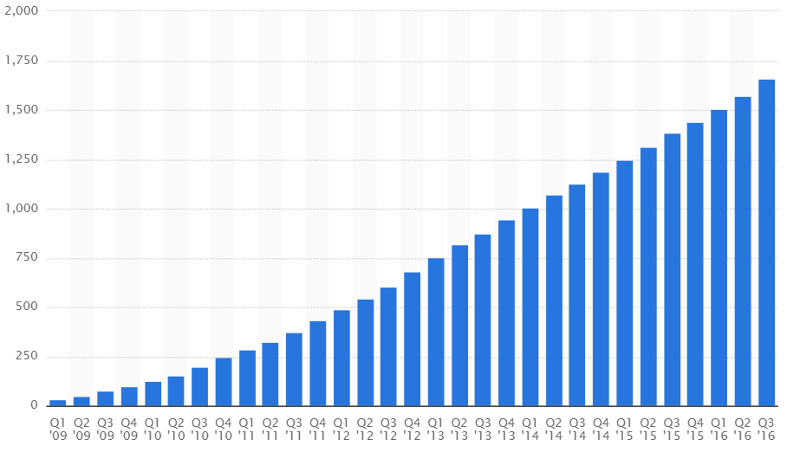

The most active feed fetcher, and the most active bot in general, was the one associated with Facebook’s mobile app. It fetches website information so it can be viewed in the in-app browser. Overall it accounted for 4.4 percent of all website traffic on the Incapsula network. This reflects the growth rate of Facebook’s mobile user base, which reportedly grew by 19 percent year-over-year and reached over 1.6 billion monthly active users in Q3 2016.

Humans Leading the Way, Bots Following Suit

Elevated mobile bot activity is the result of a larger trend: a shift in human behavior toward mobile browsing. According to StatCounter, mobile traffic outgrew desktop traffic for the first time in November 2016, reaching 50.31 percent the following month.

Our own traffic reflects that shift. In 2016 we documented a 10.6 percent increase in mobile user website traffic, coupled with a 16 percent decrease in the number of desktop user visits. However, our sampling shows desktop users retained a majority, representing 55.39 percent of all human visits.

The Android framework bot, the second most active feed fetcher, is similarly associated with mobile usage, being the agent of Dalvik and Android Runtime processes. So is the CFNetwork bot, the third most active feed fetcher, which is used by iPhone apps.

Overall, bots associated with mobile apps and services account for over 69 percent of all feed fetcher traffic, serving to illustrate the effect of the mobile revolution on the bot traffic landscape.

94.2 Percent of Websites Experienced a Bot Attack

The most alarming statistic in this report is also the most persistent trend it observes: for the past five years, every third website visitor was an attack bot.

The implications of this trend are felt by many digital business owners, the majority of whom are facing non-human attackers on a regular basis. Specifically, out of the 100,000 domains in this survey, 94.2 percent experienced at least one bot attack over the 90-day period.

Often, these assaults are the result of cybercriminals casting a wide net with automated attacks targeting thousands of domains at a time.

While these indiscriminate assaults are not nearly as dangerous as targeted attacks, they still have the potential to compromise numerous unprotected websites. Ironically, the owners of these websites tend to ignore the danger of bots the most, wrongly thinking that their website is too “small” to be attacked.

It is this ostrich mentality that helps bot attacks succeed, motivating the cybercriminals to keep launching bigger and more elaborate automated assaults.

Methodology

The data presented herein is based on a sample of over 16.7 billion bot and human visits collected from August 9, 2016 to November 6, 2016. The traffic data came from 100,000 randomly chosen domains on the Incapsula CDN.

Year-over-year comparison relies on data collected for the previous Bot Traffic Report, consisting of a sample of over 19 billion human and bot visits occurring over a 90-day period, from July 24, 2015 to October 21, 2015. That sample was collected from 35,000 Incapsula-protected websites with a daily traffic count of at least 10 human visitors.

Geographically, the observed traffic includes all of the world’s 249 countries, territories, or areas of geographical interest (per codes provided by the ISO 3166-1 standard).
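The two sampling windows can be compared with a few lines of date arithmetic. This is a back-of-the-envelope sketch only: the visit totals are the "over" figures quoted above, so the per-day and per-domain averages are lower bounds, and the names used are illustrative.

```python
from datetime import date


def inclusive_days(start: date, end: date) -> int:
    """Number of calendar days in a window, counting both endpoints."""
    return (end - start).days + 1


SAMPLES = {
    # year: (start, end, visits (lower bound), domains)
    2016: (date(2016, 8, 9), date(2016, 11, 6), 16.7e9, 100_000),
    2015: (date(2015, 7, 24), date(2015, 10, 21), 19e9, 35_000),
}

summary = {}
for year, (start, end, visits, domains) in SAMPLES.items():
    days = inclusive_days(start, end)
    per_domain_per_day = visits / days / domains
    summary[year] = (days, per_domain_per_day)
    print(f"{year}: {days} days, ~{per_domain_per_day:,.0f} visits per domain per day")
```

Both windows span 90 days, but the 2016 sample averages far fewer visits per domain, which is consistent with it including low-traffic sites that the 2015 sample excluded.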

The analysis was powered by the Incapsula Client Classification engine—a proprietary technology relying on cross-verification of such factors as HTTP fingerprint, IP address origin, and behavioral patterns to identify and classify incoming web traffic.
