We recently introduced a mesh network topology that keeps traffic inspection and request logs within the customer’s region. This is especially useful for customers that must meet regional compliance requirements, such as those in Australia and Canada, where strict data sovereignty laws require certain data, such as government information, to remain within the country’s borders.

With the mesh topology, traffic may still be scrubbed outside the region to take advantage of our large network capacity, but the Layer 7 traffic stays encrypted while it transits the Imperva network. Decryption and traffic termination always happen at our in-region points of presence (PoPs), so data sovereignty requirements can still be met.

The new architecture also brings benefits in capacity and performance.

Background

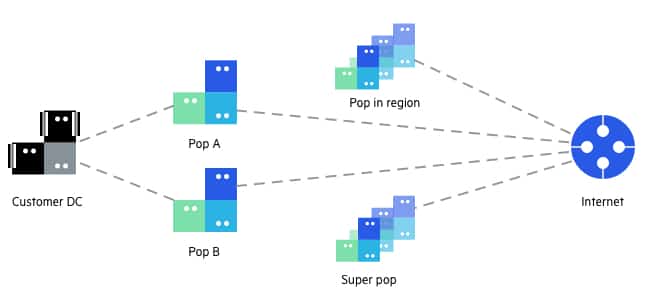

Previously, customers using our DDoS Protection for Networks had to create two tunnels per region toward our PoPs: one toward a regional PoP and one toward a super PoP. During a DDoS attack originating from multiple regions, all traffic flowed toward those tunnels over an ISP’s international backbone.

If scrubbing is always done closest to the customer, the ISP in the middle becomes a weak point. If a large-scale attack congests the ISP’s backbone, the ISP may block the destination IP (null routing) and drop all requests to it, including clean traffic.

This led us to ask: what if traffic reaching the origin server from outside the region had to travel through fewer ISPs and hops?

Attack Traffic and Clean Traffic Flow

Our mesh topology does exactly this. It lets us anycast customer IP ranges from all PoPs in the region, as well as from all our super PoPs outside the region where the original tunnels reside. This achieves two goals:

- Attacks on customer ranges can now be scrubbed closer to where they originate, instead of traveling over the ISP backbone to the PoP where the customer connects to us. This reduces the chance that the ISP in the middle will null route the range.

- In an idle state, clean traffic can flow over our mesh topology, which runs over high-quality links for greater capacity and performance.

No matter where a customer terminates their tunnels, traffic will reach the closest PoP in the region or the closest super PoP outside the region.
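To make the anycast behavior more concrete, here is a minimal Python sketch under simplifying assumptions. Real anycast path selection is decided by BGP; here it is approximated as "the announcing PoP with the lowest latency from the source wins". The PoP names, regions, tunnel placement, and latencies are all hypothetical. The sketch compares where traffic from a European source enters for a customer whose range is homed in Australia, without and with the mesh.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoP:
    name: str
    region: str
    is_super: bool


def announcing_pops(pops, customer_region, tunnel_pops, mesh_enabled):
    """Return the PoPs that announce the customer's IP range (simplified model)."""
    if not mesh_enabled:
        # Without the mesh, only the PoPs terminating the customer's tunnels announce.
        return [p for p in pops if p.name in tunnel_pops]
    # With the mesh, every in-region PoP and every super PoP also announces the range.
    return [
        p for p in pops
        if p.region == customer_region or p.is_super or p.name in tunnel_pops
    ]


def ingress_pop(latency_ms, candidates):
    """Approximate anycast: the source enters at the lowest-latency announcing PoP."""
    return min(candidates, key=lambda p: latency_ms[p.name])


# Hypothetical PoPs and latencies from a source in Europe (illustrative numbers only).
POPS = [
    PoP("SYD", "AU", False),
    PoP("MEL", "AU", False),
    PoP("SIN", "APAC", True),
    PoP("LON", "EU", True),
    PoP("FRA", "EU", False),
]
LATENCY_FROM_EUROPE = {"SYD": 280, "MEL": 290, "SIN": 170, "LON": 5, "FRA": 15}
TUNNELS = {"SYD", "SIN"}  # one regional PoP and one super PoP, as in the old setup

if __name__ == "__main__":
    for mesh in (False, True):
        candidates = announcing_pops(POPS, "AU", TUNNELS, mesh)
        pop = ingress_pop(LATENCY_FROM_EUROPE, candidates)
        print(f"mesh={mesh}: enters at {pop.name}")
```

In this toy model, European attack traffic has to cross long-haul links to the Singapore tunnel PoP when the mesh is off. With the mesh on, the same traffic is absorbed at the nearby super PoP, which mirrors the idea of scrubbing closer to the attack origin.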

Performance Impact

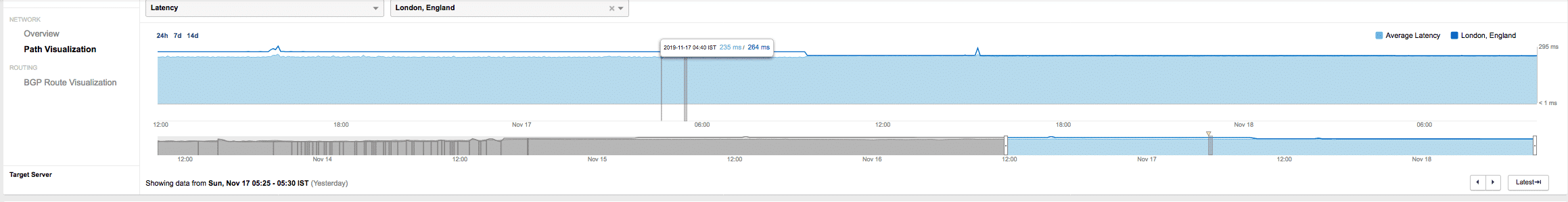

Looking at Europe, we can see an improvement of almost 30 ms for London after switching from no-mesh to mesh.

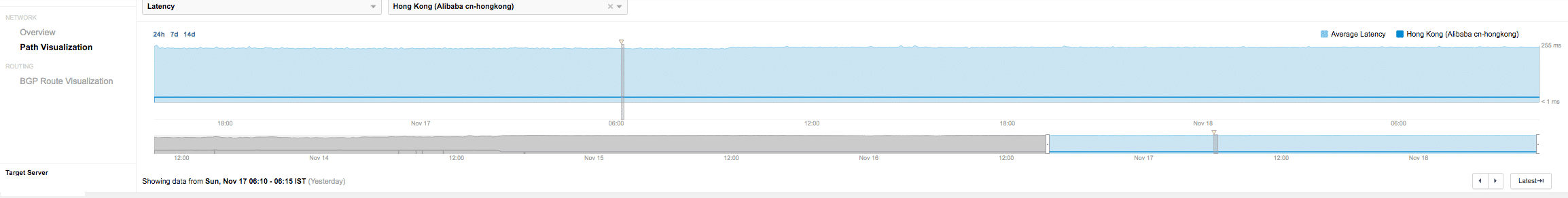

In APAC, however, there is no change, which indicates that the existing route is already optimal.

Before & After

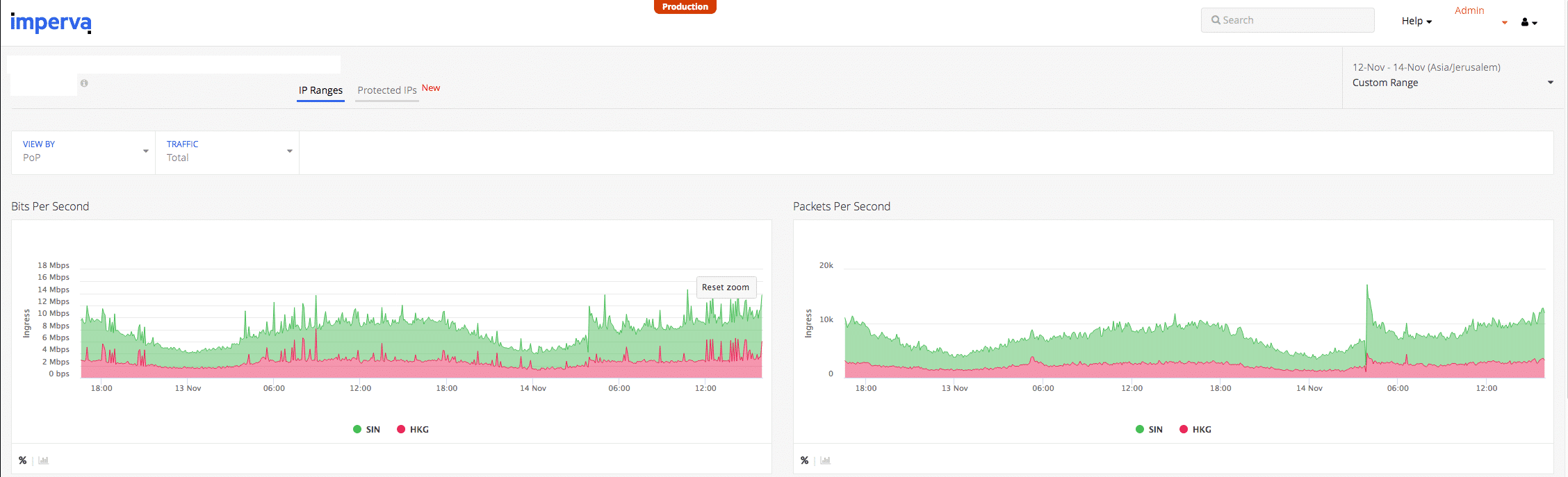

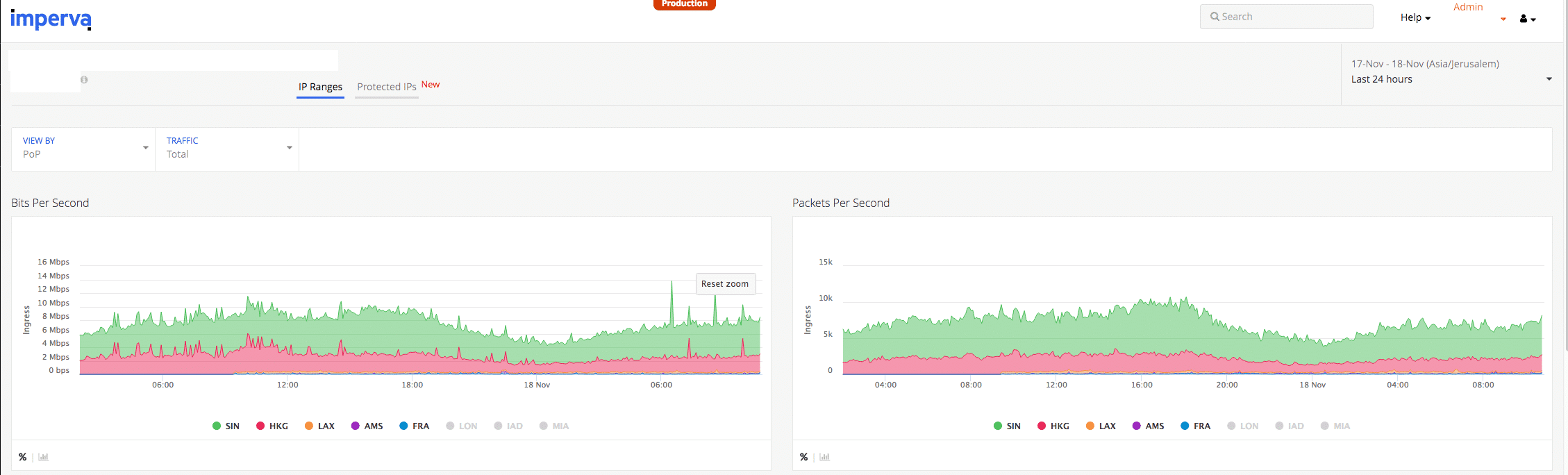

Here is a customer dashboard before the mesh was implemented, showing traffic from only the two PoPs where tunnels are configured.

And here is the customer dashboard after the mesh was implemented, showing traffic from multiple PoPs close to the users’ locations, not just the PoPs directly connected to the customer. When traffic is meshed, every PoP that serves the origin server is listed.

Routing Flexibility

For maximum flexibility, we can control, per BGP session, whether to anycast over the mesh. The decision is based on several parameters, such as the actual performance improvement and the connection type (for example, we don’t want to anycast over the mesh for cross-connect customers). Customers can configure multiple connections and several BGP sessions, and we can select which of them are anycast.
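The per-session decision can be pictured as a simple rule. The following Python sketch is hypothetical: the text names performance improvement and connection type as inputs but does not say how they are weighed. The latency threshold, the connection-type labels, the session IDs, the measurements, and the function names are therefore all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical label for a physical cross-connect; "tunnel" stands in for other types.
CROSS_CONNECT = "cross_connect"
# Hypothetical minimum latency gain (ms) required before anycasting over the mesh.
MIN_IMPROVEMENT_MS = 5.0


@dataclass(frozen=True)
class BgpSession:
    session_id: str
    connection_type: str
    latency_without_mesh_ms: float  # measured latency via the direct tunnels only
    latency_with_mesh_ms: float     # measured latency when anycast over the mesh


def anycast_over_mesh(session, min_improvement_ms=MIN_IMPROVEMENT_MS):
    """Decide, for one BGP session, whether to anycast the range over the mesh."""
    if session.connection_type == CROSS_CONNECT:
        # Cross-connect customers are kept off the mesh.
        return False
    improvement = session.latency_without_mesh_ms - session.latency_with_mesh_ms
    return improvement >= min_improvement_ms


def select_mesh_sessions(sessions):
    """Return the IDs of the sessions that would be anycast over the mesh."""
    return [s.session_id for s in sessions if anycast_over_mesh(s)]


# Illustrative sessions with made-up measurements.
SESSIONS = [
    BgpSession("session-a", "tunnel", 60.0, 32.0),       # large gain: use the mesh
    BgpSession("session-b", "tunnel", 40.0, 40.0),       # no gain: stay direct
    BgpSession("session-c", CROSS_CONNECT, 50.0, 20.0),  # cross-connect: stay direct
]

if __name__ == "__main__":
    print(select_mesh_sessions(SESSIONS))  # ['session-a']
```

Keeping the decision per session means one customer can have some BGP sessions anycast over the mesh while others, such as a cross-connect, stay on their direct path.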
