The Widening Gap

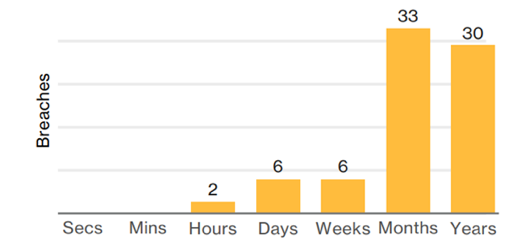

Data breaches by insiders are very challenging to catch, and the gap between the rise of insider threats and the speed at which they are hunted down keeps widening. According to the 2017 Verizon Data Breach Investigations Report, the great majority of insider and privileged-misuse breaches go undetected for months or even years (Figure 1). To make matters worse, it has become progressively easier for hackers to gain access and masquerade as legitimate insiders. In April 2017, the largest aggregate credentials text file ever found on the dark web contained 1.4 billion clear-text credentials. These unencrypted, valid usernames and passwords, collected from a large number of sources including Netflix, MySpace, Badoo and LinkedIn, give even less sophisticated hackers a convenient starting point.

Figure 1: Breach discovery timeline for insider and privileged-misuse breaches, n=77 (source: 2017 Verizon Report)

In this blog post, we discuss research conducted by the Imperva Defense Center into common practices insiders use to infiltrate databases, and introduce new techniques for detecting suspicious database commands and access patterns. These techniques, available in the latest version of Imperva CounterBreach, use activity modeling to help organizations reduce the time it takes to detect suspicious insider activity.

Stepping into the Attacker’s Mindset

Like any other thief, an attacker who wants to steal data from a database will try to cover his tracks at every stage of the attack, from reconnaissance to data exfiltration. To do so, he is likely to draw on several methods of operation, such as:

- Using dynamic SQL queries with digested content

- Injecting malicious code into the database

- Communicating with the database using a dedicated shell

Knowing which methods attackers can use to access databases and exfiltrate data is critically important when designing threat-detection algorithms. The Imperva Defense Center explored all these methods of operation by reverse-engineering penetration-testing tools. Combining the results with our domain knowledge, we created a list of database commands and access patterns that indicate a high probability of an attack.

Once we had this list, we worked with dozens of databases from Imperva customers to understand how common these commands and access patterns are in regular day-to-day database activity. For this analysis, we used audit data collected by SecureSphere together with insights gathered from CounterBreach. This layered approach, combined with domain knowledge of databases, is what makes our algorithm unique and powerful.

Determining Suspicious Access to the Database

Based on our research, we found that these commands and access patterns fall into two main groups. The first group consists of commands and access patterns that had never been used in regular activity; their use indicates suspicious activity with very high probability.

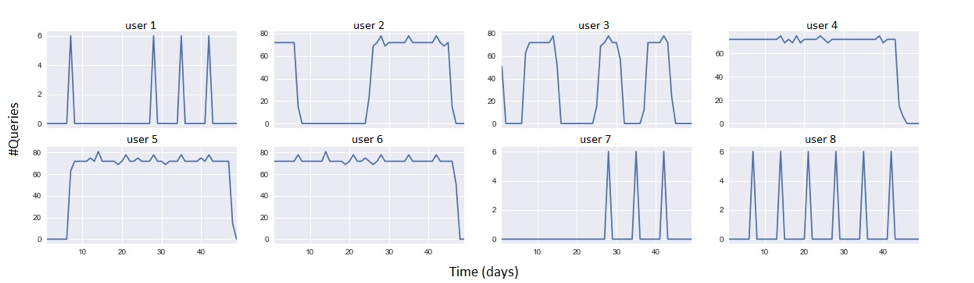

The second group of commands, although rare, was used by interactive users or applications in some cases. This group must be handled with extra care, since using these commands does not necessarily indicate an attack. To reduce false positives, we performed further statistical inference and found that the users who legitimately ran a command from the second group did so repeatedly and in a very predictable manner (Figure 2).

Figure 2: Number of queries of a suspicious command for different users
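The post does not say which statistical test was used. As a hypothetical illustration of the "repeated and predictable" pattern, the Python sketch below counts how often each user ran one rare command per day and scores regularity with the coefficient of variation of those daily counts (0.0 means the same count every day). The `(user, day, command)` record format, the `rare_cmd` name and the choice of metric are all assumptions, not Imperva's implementation.

```python
from collections import defaultdict
from statistics import mean, pstdev


def daily_counts(events, command):
    """Count how many times each user ran `command` on each day.

    events: iterable of (user, day, command) tuples (hypothetical audit format).
    Returns {user: {day: count}}; users who never ran the command are absent.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for user, day, cmd in events:
        if cmd == command:
            counts[user][day] += 1
    return {user: dict(per_day) for user, per_day in counts.items()}


def regularity(per_day, days):
    """Coefficient of variation of a user's daily counts over `days`.

    0.0 means the same count every day; larger values mean burstier usage.
    Returns infinity if the user never ran the command during `days`.
    """
    series = [per_day.get(day, 0) for day in days]
    avg = mean(series)
    return pstdev(series) / avg if avg else float("inf")


# Hypothetical example: a service account runs the rare command five times a day,
# while another user runs it once, on the last day only.
days = ["d1", "d2", "d3", "d4"]
events = [("app_svc", d, "rare_cmd") for d in days for _ in range(5)]
events.append(("alice", "d4", "rare_cmd"))

counts = daily_counts(events, "rare_cmd")
for user, per_day in sorted(counts.items()):
    print(user, round(regularity(per_day, days), 2))
# alice 1.73
# app_svc 0.0
```

A low score matches the steady, legitimate usage the research observed; a one-off burst from a user stands out with a high score.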

Positive vs. Negative Activity Model

This finding suggested that both negative and positive activity modeling would be needed to identify suspicious activity. The two approaches often complement one another, and both are valid for detecting potential insiders. Negative activity modeling looks for activity that should not happen at all. It can be refined further by distinguishing between activities that should never occur for any user and activities that are very rare among the vast majority of users. Both the first and second groups of commands and access patterns we discovered were classified through this modeling.
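One way to express the negative model's two tiers is to measure, over historical audit data, how many distinct users ran each expert-chosen candidate command. The sketch below is hypothetical: the `(user, command)` input format, the placeholder command names and the 5% `rare_user_fraction` cut-off are assumptions chosen for illustration.

```python
from collections import defaultdict


def classify_commands(baseline, candidates, rare_user_fraction=0.05):
    """Split expert-chosen candidate commands into negative-model tiers.

    baseline: iterable of (user, command) pairs from historical audit data.
    candidates: commands that domain experts consider attack indicators.
    rare_user_fraction: hypothetical cut-off; a command run by at most this
        fraction of all observed users counts as "very rare".
    Returns (never_used, rare, common) as sets of command names.
    """
    users_per_command = defaultdict(set)
    all_users = set()
    for user, command in baseline:
        all_users.add(user)
        users_per_command[command].add(user)

    never_used, rare, common = set(), set(), set()
    for command in candidates:
        users = users_per_command.get(command, set())
        if not users:
            never_used.add(command)  # no user ever ran it: first group
        elif len(users) <= rare_user_fraction * len(all_users):
            rare.add(command)  # only a handful of users ran it: second group
        else:
            common.add(command)  # widely used: not a useful indicator on its own
    return never_used, rare, common


# Hypothetical baseline: 40 users run "select"; only user0 ever ran "cmd_b".
baseline = [(f"user{i}", "select") for i in range(40)] + [("user0", "cmd_b")]
never_used, rare, common = classify_commands(baseline, {"cmd_a", "cmd_b", "select"})
print(sorted(never_used), sorted(rare), sorted(common))
# ['cmd_a'] ['cmd_b'] ['select']
```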

Positive activity modeling, unlike its negative counterpart, profiles day-to-day activity. It profiles the activity of each user, of groups with similar characteristics or interests (known as peer groups), and of the entire organization. Behavioral characteristics used for profiling include the user's access pattern to the data, the data stores the user usually accesses, hours of access, amount of data retrieved, and much more. Once an activity model is built for each user or group, we can detect suspicious activity that diverges from the relevant profile. We applied this model to the second group of commands and access patterns.
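The post attributes the positive model to machine learning and does not describe the algorithm. The sketch below swaps in a deliberately simple stand-in: a per-user profile of previously seen commands and access hours, where any unseen command or hour is reported as a deviation. Peer-group and organization-level profiles, data volume and data-store features are omitted, and the `(user, hour, command)` format and all names are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """What one user normally does (a tiny subset of the features in the text)."""
    commands: Counter = field(default_factory=Counter)
    hours: set = field(default_factory=set)


def build_profiles(history):
    """history: iterable of (user, hour, command) tuples (hypothetical format)."""
    profiles = defaultdict(UserProfile)
    for user, hour, command in history:
        profile = profiles[user]
        profile.commands[command] += 1
        profile.hours.add(hour)
    return dict(profiles)


def deviations(profiles, user, hour, command):
    """List the ways a new event diverges from the user's learned profile."""
    profile = profiles.get(user)
    if profile is None:
        return ["unknown_user"]
    found = []
    if profile.commands[command] == 0:  # Counter returns 0 for unseen keys
        found.append("new_command")
    if hour not in profile.hours:
        found.append("unusual_hour")
    return found


history = [("alice", 9, "select"), ("alice", 10, "select"), ("bob", 22, "backup")]
profiles = build_profiles(history)
print(deviations(profiles, "alice", 9, "select"))  # []
print(deviations(profiles, "alice", 3, "drop"))    # ['new_command', 'unusual_hour']
```

A production profiler would learn thresholds rather than treat every unseen value as a deviation; exact-match profiles are used here only to keep the idea visible.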

In the classic case of suspicious commands, as in the first group, the negative activity model alone suffices (i.e. pure negative activity modeling). In the more complex case of rarely used commands, as in the second group, a hybrid approach that combines the two models is needed (i.e. combined negative activity modeling). The negative activity model relies on domain expertise, while the positive activity model relies on machine learning algorithms; combining them helps minimize false positives.
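The decision logic described above can be sketched as follows. This is a hypothetical illustration, not CounterBreach's implementation: the command groups are assumed to come from the negative model, the positive model is reduced to the set of commands each user has run before, and all names are placeholders.

```python
def assess(event, never_used, rare, user_history):
    """Combine the negative and positive models for a single (user, command) event.

    never_used, rare: command groups produced by the negative model.
    user_history: {user: set of commands that user has run before}, a minimal
        stand-in for the positive model's per-user profile.
    Returns "alert", "ok", or "not_covered".
    """
    user, command = event
    if command in never_used:
        # Pure negative model: nobody in the organization runs this command.
        return "alert"
    if command in rare:
        # Combined model: a rare command is suspicious only for users
        # whose own profile does not include it.
        return "ok" if command in user_history.get(user, set()) else "alert"
    return "not_covered"  # outside both groups; other detections would apply


never_used, rare = {"cmd_a"}, {"cmd_b"}
user_history = {"svc_account": {"cmd_b", "select"}}
for event in [("svc_account", "cmd_b"), ("mallory", "cmd_b"), ("svc_account", "cmd_a")]:
    print(event, assess(event, never_used, rare, user_history))
```

In the incident described in the next section, the first command would fall under `never_used` and trigger the pure negative rule, while the second would fall under `rare` and be flagged only because it was absent from that user's history.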

Detecting the Actual Attacks: Not Theoretical, But Practical!

When we applied these detection techniques to real-world customer databases, we uncovered an attack on an Imperva customer. Our investigation showed that a single user in the customer's organization ran two suspicious commands on the day of the attack.

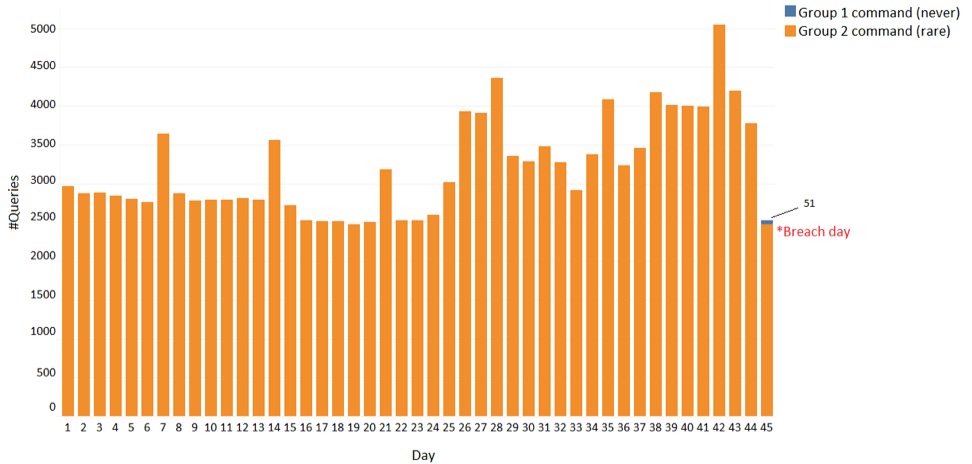

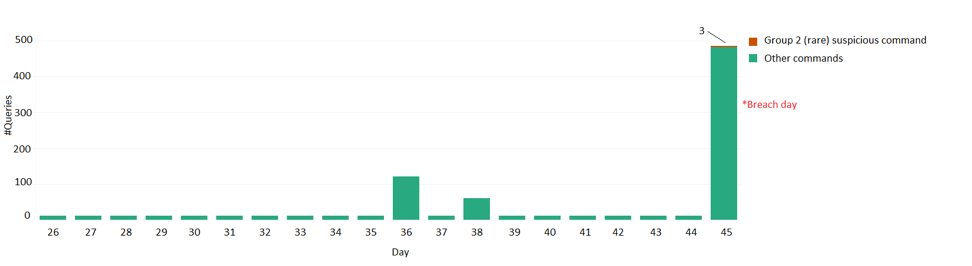

Figure 3 shows the distribution of these two suspicious commands across the entire organization. The first command (blue in the graph) had never been used in the organization before, and we detected this incident using the pure negative activity model. The second command (orange in the graph) belongs to the group of commands that are rare but have been used by some users. At this point, we could not yet draw any conclusion about this command.

Figure 3: Distribution of two suspicious commands across the entire organization

We then applied the positive model to the second command and found that it was indeed suspicious for the user who performed the attack: the profile built for that user showed that this action was out of character. Figure 4 shows that this user had never performed this rare and dangerous action before.

Figure 4: Rare suspicious command caught by user profiler

Conclusion

In a world where the number of data breaches grows every day, and discovering insider and privileged-abuse breaches can take months or even years, uncovering these threats faster and stopping them before any data is exfiltrated poses numerous challenges. As attackers become more sophisticated, our mission is to uncover their tracks and stay one step ahead of them at all times. Accurately detecting attacks while keeping false positives to a minimum requires combining the negative activity model, which relies on domain expertise, with the positive activity model, which relies on machine learning algorithms.
